September 25, 2012. By Robert McMillan in Wired. Editor’s note: This page has long advocated substantial increases in energy efficiency as well as increased use of electronic files to mitigate the use of paper across society. The Times report linked below and this story reflect on why there is a perceived need to build more and more power plants using increased fossil fuels when there might be other answers that will save us both money and the planet.

When The New York Times published the first part of its sweeping investigative report on the state of the world’s data centers, it led with a six-year-old story about Facebook dispatching engineers to local Walgreens stores in a desperate bid to cool down the 40- by 60-foot rental that was home to the company’s servers.

The report — which ran on the front page of the paper this Sunday, above the fold — then proceeded to describe an extremely wasteful industry that’s “sharply at odds with its image of sleek efficiency and environmental friendliness.”

Yes, data centers are wasteful. But Facebook, for one, has evolved over those six years — significantly. And that’s no secret. Largely lost amid the Times’ multiple-page analysis is the fact that the data centers built by a handful of internet giants are looking less and less like the 40- by 60-foot rental space that was home to Facebook back in 2006 — and that these advances are just beginning to trickle down to the rest of the industry.

Facebook engineers no longer forage for fans. They think about how to optimize undersea cable routes. They design brand-new ways of storing data with some of the power turned off. They even design their own servers. And Facebook is now sharing many of its designs with the rest of the world.

Companies like Google and Apple and Amazon are also building a new generation of data centers that look nothing like that 40- by 60-foot overheated Facebook cage from 2006. But they’re doing most of this work in secret. “We generally don’t give out — for competitive advantage — a lot of details,” says an engineer with a large internet company who, well, wasn’t authorized to speak with the press. In fact, none of the big internet companies we reached out to could provide us with on-the-record comment by deadline Monday.

That makes it tricky to write an investigative exposé on the industry. The stuff that companies will talk about is often so out-of-date that it’s obsolete.

The Times asked McKinsey & Co. to study data-center energy use, and the consulting firm found that “on average, they were using only 6 percent to 12 percent of the electricity powering their servers to perform computations,” the newspaper reported.

That’s pretty much in line with what McKinsey was saying back in this 2008 PowerPoint presentation, where it found “6 percent average server utilization” overall and that 80 percent of servers were running at between 5 and 30 percent utilization. But at the biggest data-center operators, things are changing in so many other ways.

For a while, the big web companies simply threw servers at the problem, building out quickly and cheaply, but in the past few years, some of the most interesting and exciting innovations at Google and others have been happening quietly and out of the public’s view.

Google is the undisputed leader in this new trend. It surprised some earlier this year when it disclosed that it makes networking gear for its data centers and has now become one of the largest computer builders in the world.

“Google is a computer company,” says Andrew Feldman, the founder of Seamicro, a new-age server maker now owned by AMD and a former executive with a company that supplied networking gear to Google. “What they build are computers that are larger than football fields.”

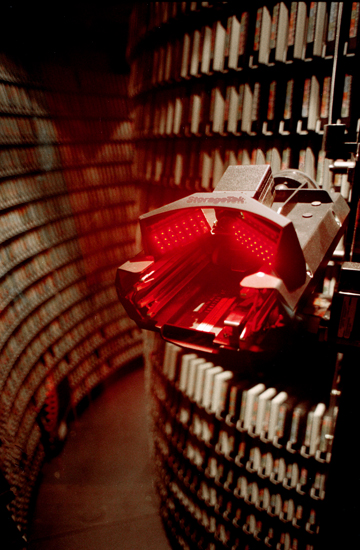

These are essentially a new generation of supercomputers that serve up our mail messages and movies and photographs. They’re built by the web giants, who make money by squeezing extra efficiency out of their data centers. And they’re on one side of a growing data-center digital divide. On the other side are the more traditional enterprise data centers, run by the geeks with the pocket protectors in the Fortune 500.

Apple can build massive solar-power arrays and use biogas to generate energy. Google can strip out power-hungry components and build much more efficient switches and computers. But for your average corporate engineer, that’s not an option.

“If you think about an enterprise IT shop, an IT shop doesn’t have the luxury of building every piece of technology that goes with their data center,” says John Engates, chief technology officer with Rackspace, a company that runs big data centers and sells computing power to corporations. “They really have to take what they’re given, and what they’re given comes from hundreds of places around the business.”

It’s leading to a new kind of digital divide, says Feldman.

“The digital divide is among those who have production infrastructure, which means that their infrastructure produces profit, and everybody else,” says Feldman. “What Google does today, the rest of the industry — read middle America, mainstream companies — will be implementing in five to seven years.”

It’s an open question whether, five years from now, mainstream America will take advantage of these new data-center techniques by simply moving to the cloud — and buying computer power from companies like Google or Amazon — or whether the secrets of Silicon Valley will trickle out to the rest of the world one engineer at a time.